Shortcut learning minigame

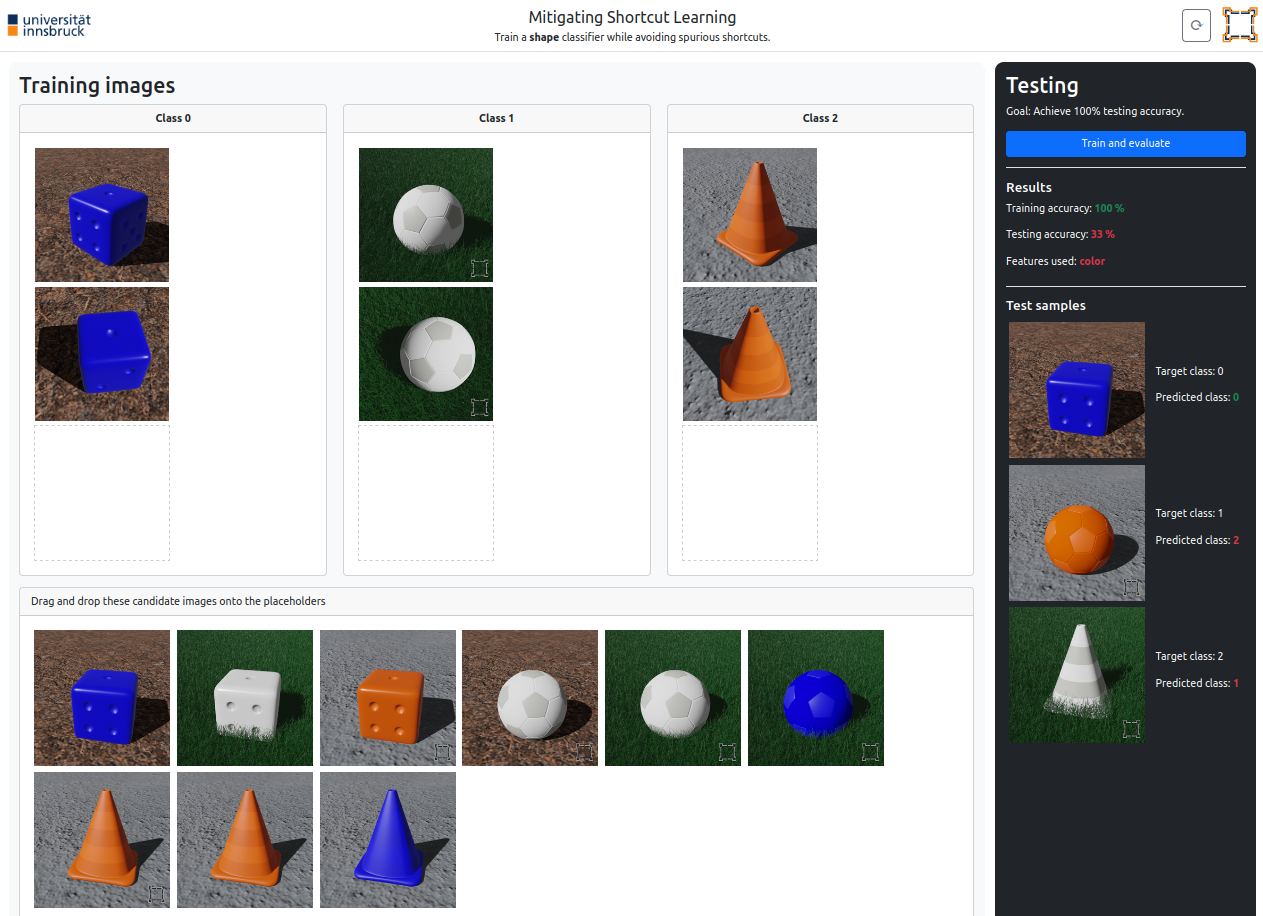

Let’s play a game. Your goal is to train a shape classifier and achieve 100% accuracy on the test images. You can drag and drop images from the bottom image pool into the training set. Watch out for the spurious features in the training images, which the classifier prefers over the shape. Choose a set of training images such that the classifier cannot solve the training set by relying on the spurious features.
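The winning condition of the minigame can be sketched as a check on the chosen training set. This is a minimal sketch, not the game's actual code. It assumes that each image is reduced to a dict holding its shape label and a few hypothetical spurious attributes (`color`, `background`), and it flags a training set if any single spurious attribute perfectly predicts the shape.

```python
from collections import defaultdict

def feature_solves_training_set(images, feature, label="shape"):
    """Return True if `feature` alone perfectly predicts `label`,
    i.e. every value of the feature co-occurs with exactly one label."""
    labels_per_value = defaultdict(set)
    for img in images:
        labels_per_value[img[feature]].add(img[label])
    return all(len(labels) == 1 for labels in labels_per_value.values())

def shortcut_free(images, spurious_features):
    """Return True if no single spurious feature can solve the training set."""
    return not any(feature_solves_training_set(images, f) for f in spurious_features)

# Hypothetical training set in which color tracks the shape perfectly.
biased = [
    {"shape": "circle", "color": "red", "background": "light"},
    {"shape": "circle", "color": "red", "background": "dark"},
    {"shape": "square", "color": "blue", "background": "light"},
    {"shape": "square", "color": "blue", "background": "dark"},
]
# Adding images with the opposite color breaks that correlation.
balanced = biased + [
    {"shape": "circle", "color": "blue", "background": "light"},
    {"shape": "square", "color": "red", "background": "dark"},
]

print(shortcut_free(biased, ["color", "background"]))    # False: color solves it
print(shortcut_free(balanced, ["color", "background"]))  # True
```

Only single attributes are checked here. A real classifier can also combine several spurious features or memorize individual images, so passing this check is necessary but not sufficient for a shortcut-free training set.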

I created this minigame for the student inday, an open lab day for students at the University of Innsbruck. Many students took on the challenge and eventually solved it, but only one student solved it on their first attempt. It was great to see students keep playing and testing alternative solutions. Afterwards, we discussed their experiences with shortcut learning and strategies to mitigate it.

Shortcut learning is a common cause of generalization failures in machine learning (ML). It describes the tendency of ML methods to learn a decision rule that solves the training data but fails to generalize to other datasets. Shortcut features are usually biases in the training dataset that correlate with the classification target but are not characteristic features of the target.
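To make the definition concrete, here is a toy learner that builds a decision rule from a single feature. It is a hypothetical sketch, not a real ML method: images are dicts, `outline` stands in for the shape evidence we want the classifier to use, `color` is the spurious feature, and the order of `features` encodes an assumed preference for easier features when several fit the training data equally well.

```python
from collections import Counter, defaultdict

def predict(feature, rule, img):
    # Look up the label assigned to this image's feature value.
    return rule.get(img[feature])

def accuracy(feature, rule, images, label="shape"):
    hits = sum(predict(feature, rule, img) == img[label] for img in images)
    return hits / len(images)

def fit_stump(images, features, label="shape"):
    """Hypothetical one-feature learner: map each feature value to its majority
    label and keep the feature with the highest training accuracy. Ties go to
    the earlier feature, i.e. the one assumed to be easier to learn."""
    best = None
    for feature in features:
        votes = defaultdict(Counter)
        for img in images:
            votes[img[feature]][img[label]] += 1
        rule = {value: c.most_common(1)[0][0] for value, c in votes.items()}
        acc = accuracy(feature, rule, images, label)
        if best is None or acc > best[2]:
            best = (feature, rule, acc)
    return best[0], best[1]

# Biased training data: color and outline both separate the shapes.
train = [
    {"color": "red", "outline": "round", "shape": "circle"},
    {"color": "red", "outline": "round", "shape": "circle"},
    {"color": "blue", "outline": "angular", "shape": "square"},
    {"color": "blue", "outline": "angular", "shape": "square"},
]
# Another dataset in which the color bias is reversed.
shifted = [
    {"color": "blue", "outline": "round", "shape": "circle"},
    {"color": "red", "outline": "angular", "shape": "square"},
]

feature, rule = fit_stump(train, ["color", "outline"])
print(feature)                           # color
print(accuracy(feature, rule, train))    # 1.0
print(accuracy(feature, rule, shifted))  # 0.0
```

The learned rule solves the training data perfectly, yet it misclassifies every image once the color bias no longer holds.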

Shortcut learning often goes undetected because of the common practice of evaluating ML classifiers on a held-out subset of the same dataset that was used for training. The held-out evaluation set therefore contains the same biases as the training set, so a classifier that relies on them still scores well. Shortcut learning can only be detected by evaluating generalization to another, independently collected dataset that does not share these biases.
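The following sketch illustrates why a held-out split does not reveal the shortcut. It assumes hypothetical datasets of `(shape, color)` pairs and a classifier that has already learned a color-only rule; the generator `make_dataset` and both datasets are made up for illustration.

```python
import random

def make_dataset(n, color_for, seed):
    """Hypothetical generator: each image is a (shape, color) pair whose
    color is fully determined by the shape via `color_for` (the bias)."""
    rng = random.Random(seed)
    shapes = [rng.choice(["circle", "square"]) for _ in range(n)]
    return [(s, color_for[s]) for s in shapes]

# Dataset A (biased): circles are red, squares are blue.
dataset_a = make_dataset(100, {"circle": "red", "square": "blue"}, seed=0)
# Dataset B (another source): the color bias is reversed.
dataset_b = make_dataset(100, {"circle": "blue", "square": "red"}, seed=1)

# Common practice: a random train/held-out split of the same dataset.
rng = random.Random(42)
shuffled = dataset_a[:]
rng.shuffle(shuffled)
train, held_out = shuffled[:80], shuffled[80:]  # the classifier is fit on `train`

# Suppose training on `train` produced a rule that only looks at color.
shortcut_rule = {"red": "circle", "blue": "square"}

def accuracy(data):
    return sum(shortcut_rule[color] == shape for shape, color in data) / len(data)

print(accuracy(held_out))   # 1.0: the held-out split shares the bias
print(accuracy(dataset_b))  # 0.0: the bias does not transfer
```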

Understanding the causes of shortcut learning is an active research area. A common hypothesis is that feature complexity plays a major role, i.e., undesired features become shortcuts when they are easier to learn than the desired decision rule. While mitigation strategies vary with the type of shortcut, one strategy is to weaken the correlation between the shortcut feature and the target label, e.g., through data augmentation or by adding samples with an opposing shortcut feature to the training set (as in the minigame).
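One way to check whether such a mitigation has weakened the shortcut is to measure how well the shortcut feature alone can still fit the training set. This minimal sketch assumes the same kind of hypothetical `(shape, color)` pairs; `shortcut_accuracy` is an illustrative helper, and the "augmentation" here simply recolors every image in every color.

```python
from collections import Counter, defaultdict

def shortcut_accuracy(data):
    """Best training accuracy any color-only rule can reach: predict the
    majority shape for each color. 1.0 means color alone solves the set."""
    counts = defaultdict(Counter)
    for shape, color in data:
        counts[color][shape] += 1
    correct = sum(c.most_common(1)[0][1] for c in counts.values())
    return correct / len(data)

biased = [("circle", "red")] * 10 + [("square", "blue")] * 10

# Strategy 1: add samples with the opposing shortcut feature.
counterexamples = [("circle", "blue")] * 10 + [("square", "red")] * 10

# Strategy 2 (hypothetical augmentation): recolor each image in every color.
augmented = [(shape, c) for shape, _ in biased for c in ("red", "blue")]

print(shortcut_accuracy(biased))                    # 1.0
print(shortcut_accuracy(biased + counterexamples))  # 0.5
print(shortcut_accuracy(augmented))                 # 0.5
```

With two balanced classes, a value of 0.5 means color carries no information about the shape, so a classifier has to rely on something else to do better than chance on the training set.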

Finally, here are the lessons I learned for detecting and avoiding shortcut learning: understand your dataset, be skeptical of unexpectedly high performance, and test a classifier’s generalization ability beyond the held-out evaluation set.